It’s always a great pleasure to talk with one of the founding fathers of cybernetics and computation. One of the benefits of AI and robotics being relatively young fields is that many of the people who created them are not only still alive, but are still very active participants. It’s as if we can discuss democracy with Thomas Jefferson or physics with Albert Einstein, and we should never miss the opportunity.

These originators often have very different perspectives on the technology they helped create than that of the modern mainstream. They usually take a broader view of how tech will impact society instead of looking at technological progress only for the sake of progress. The venture capitalists, Silicon Valley billionaires, and start-up founders have their own interests, corporate and personal, that the elder sages have transcended. It doesn’t mean they are always right, of course, but it does mean we should listen to their voices whenever we can.

I’ve been lucky enough to meet quite a few of these luminaries, from inventor Ray Kurzweil to computer scientist Leonard Kleinrock to UNIX co-creator Ken Thompson, who are directly or indirectly responsible for much of the technology you’re using to read this article right now. In 2016, while we were both speaking at an AI conference in Oxford, I was happy to add another big fish to my “league of legends,” Noel Sharkey of the University of Sheffield. A professor of AI and robotics who also holds a Ph.D. in psychology, he became famous for his entertaining expertise on the UK television show “Robot Wars.” Noel is also a powerful political advocate whose focus in recent years is making sure the “human” isn’t forgotten in “human rights” with his Foundation for Responsible Robotics and his campaigns at the United Nations and other bodies regarding autonomous killing machines.

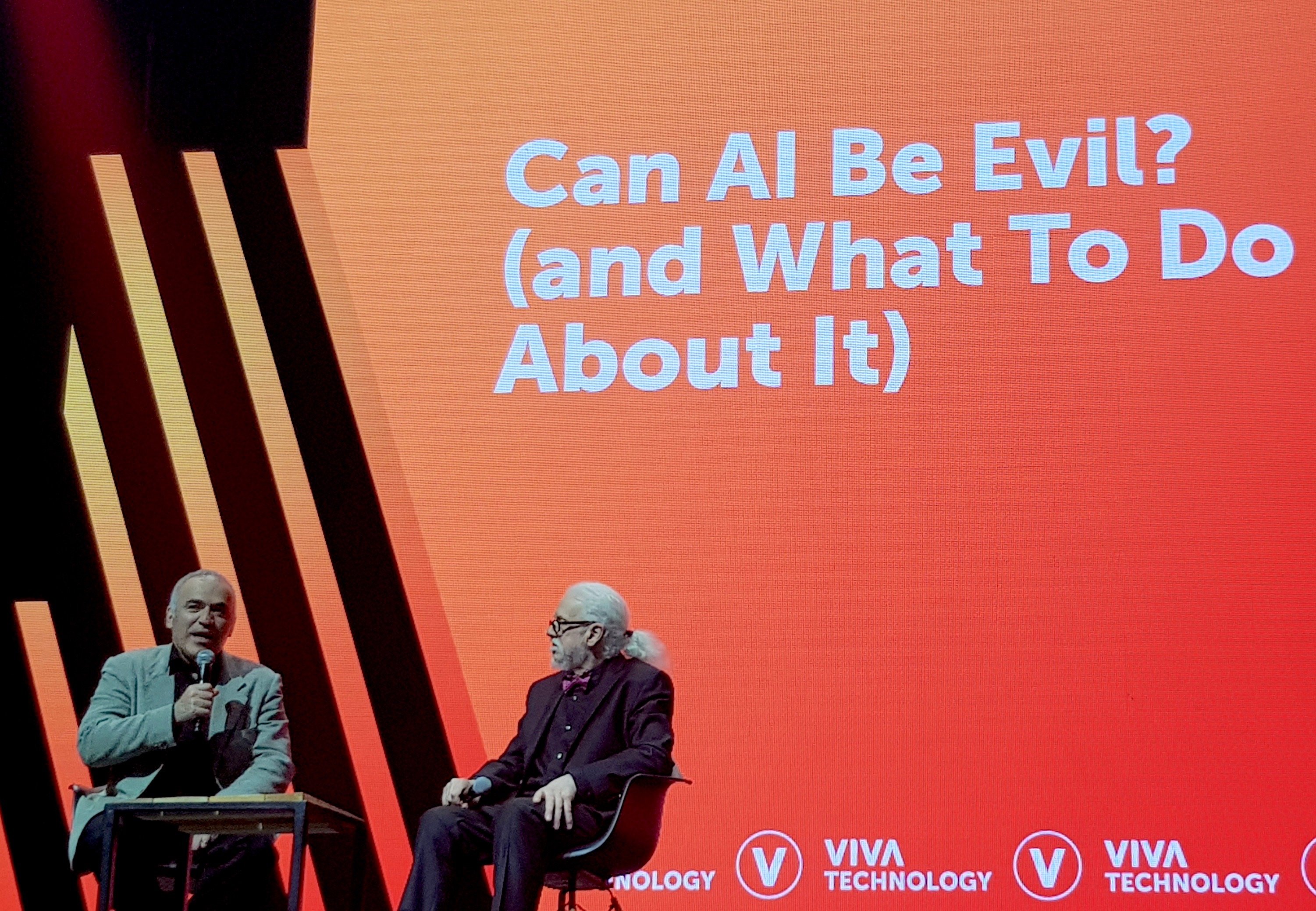

As such, he was an ideal partner for a conversation on the main stage of this year’s VivaTech event in Paris in May. The question before us was a simple one: Can AI be evil? To save time, we both answered right at the start with a spoiler alert: “No!” But of course there was a “but…” after that, or it would have been a very brief conversation.

We started with a detour, one that proved to be an illuminating contrast, as is often the case when talking with someone as multi-faceted as Noel. Referring to my personal history battling against chess machines – compared to my current advocacy for the human-plus-intelligent-machine relationship – he said he was still bitter about my loss to IBM’s Deep Blue back in 1997. I mostly managed to purge those demons myself in writing my 2017 book “Deep Thinking.” So it was a little amusing to hear Noel’s anger on my behalf – and on behalf of the AI community. Many in that group felt the historical science aspect of the human-machine chess contest, which went back to computer scientist Alan Turing himself, had been abandoned in favor of the purely competitive one. (As I acknowledged in my book, this was IBM’s right and my fault for underestimating what this dramatic shift in emphasis would mean.)

One specific issue Noel mentioned presents a relevant irony to our encounters with far more intelligent machines today. He pointed out that because Deep Blue had a network connection, there would never be a way to be 100% sure that fair play was being honored, even while you also couldn’t prove it wasn’t. This lack of transparency was the main issue, not any particular allegation of impropriety.

Just over 20 years later, those same issues are at the forefront of the debates about AI and “ethical AI,” but now in reverse. Instead of making sure intelligent machines are completely autonomous, as with a chess computer, we now want to ensure that they are not! That is, we want to guarantee that no machine is making life-changing, or life-taking, decisions without human intervention and oversight.

It’s a common misconception that machines don’t make mistakes. Even in closed systems like chess, where a free app on your phone is far stronger than any human (and than Deep Blue), they aren’t perfect. But in such systems they are inevitably better, and that matters quite a lot, whether it’s in chess or in relatively open systems like cancer diagnosis or driving a car. These practical implications have little to do with artificial general intelligence (AGI), which rivals the human ability to learn and understand in context. As Noel put it in our conversation, saying a machine is “smarter” than us when it can’t have a conversation or make you a cup of tea is abusing the term.

The mythical storytelling power of super-intelligent machines, the singularity and AGI all distract us from the real dangers and concerns facing us today. Our intelligent tools will help us overcome our challenges if we are creative and ambitious with them. They aren’t the Terminator, but they aren’t a magic wand, either. They will empower us, for better and for worse.

As I like to put it, complaining about bias in AI is like complaining about the image in the mirror. Distorting it doesn’t fix the problem. Our algorithms, no matter how sophisticated, will always reflect our own image. This doesn’t mean they aren’t useful in exposing that bias and finding many useful things in a sea of data. But it should mean we cannot pretend to pass human responsibility and accountability over to algos.

‘Augmented intelligence’ instead of artificial intelligence

It’s easier to see AI as a tool if you call it “augmented intelligence” instead of the scary and vague term “artificial intelligence.” Noel shared that he spoke with the man who coined the term “artificial intelligence” in 1956, John McCarthy, about its legacy. McCarthy told him that he wished he’d never used it, because it caused too much confusion! Four years earlier, McCarthy said, he and John von Neumann had called it “complex automata theory” and nobody was interested. As soon as it was called “artificial intelligence,” it became a popular sensation!

Confusion and fear are enemies of progress. As Noel said, there are plenty of real concerns about AI, from algorithmic prison sentencing to biased facial recognition to weapons systems that select their own targets without human intervention. No company or country wants to fall behind in the technology race, but this can mean bad news for the only race that really matters: the human race.

We ended on an optimistic note – despite the public obsession with the dystopian fantasies around robots and smarter tech, they will continue to be a boon overall, making us more productive, healthier, and more prosperous. Noel ended with an unlikely quote, paraphrasing the American president: “Make humans great again!”